regularization machine learning mastery

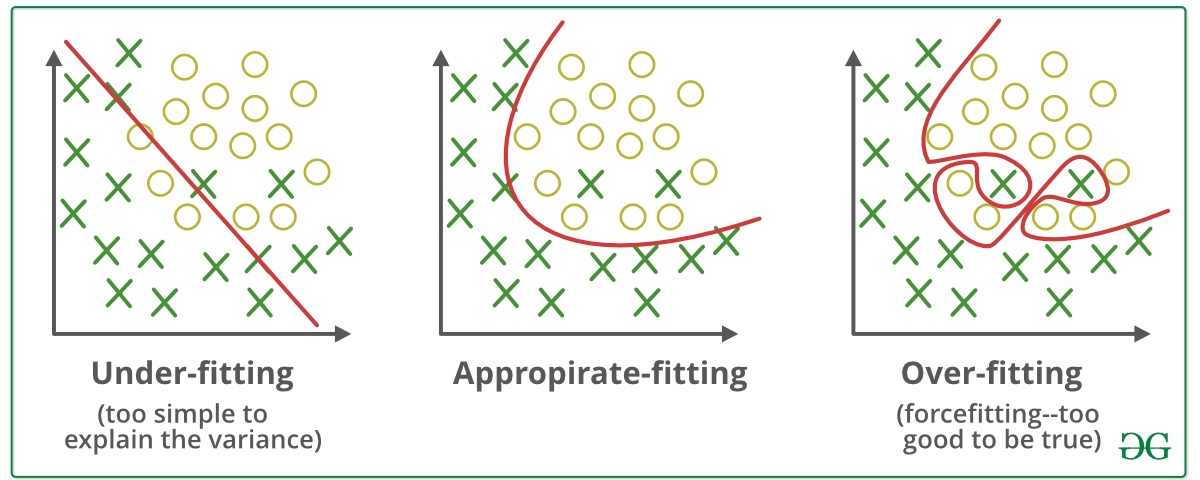

Regularization is a form of regression that shrinks the coefficient estimates towards zero. For a neural network, we can likewise reduce overfitting by reducing the network's complexity in one of two ways.

Regularisation Techniques In Machine Learning And Deep Learning By Saurabh Singh Analytics Vidhya Medium

Regularization works by adding a penalty, or complexity term, to the loss function of a complex model.

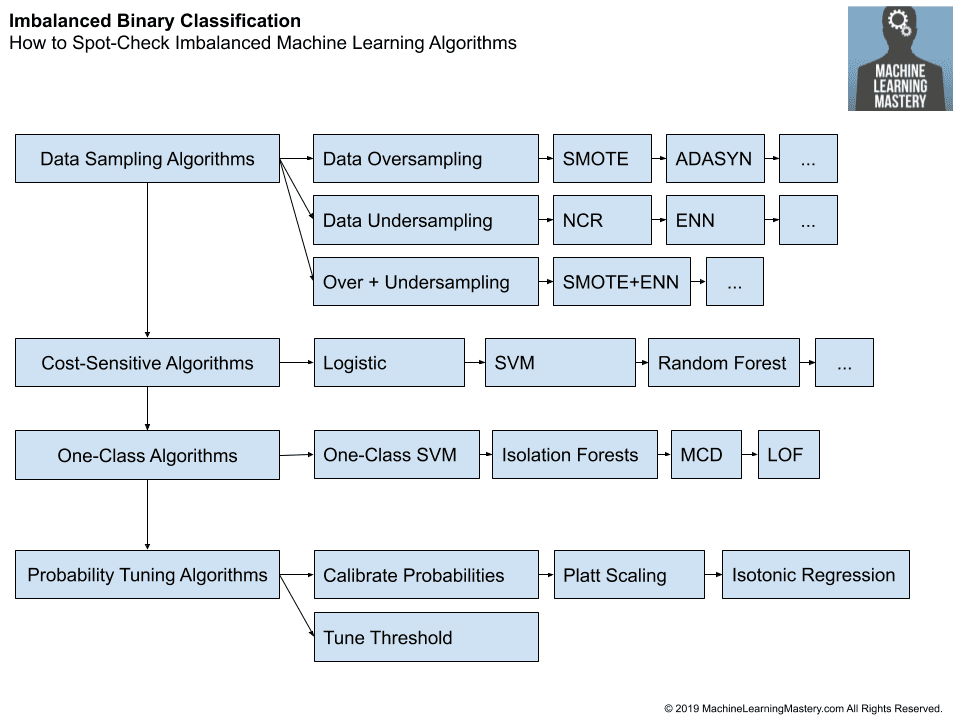

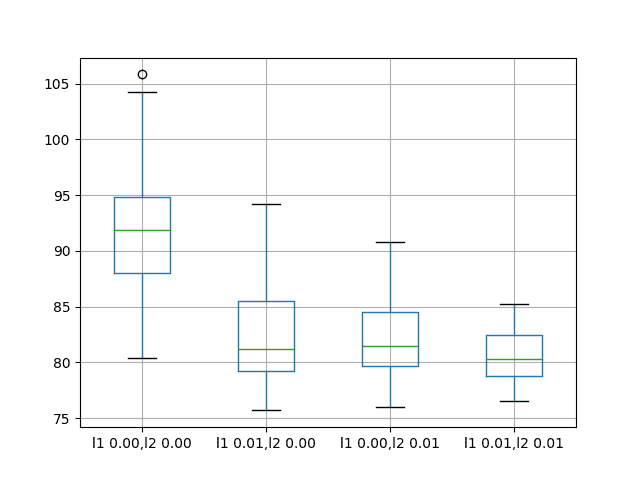

A regression model that uses the L1 regularization technique is called Lasso Regression, and a model that uses L2 is called Ridge Regression. In other words, this technique discourages learning a more complex or flexible model, to avoid the problem of overfitting. Based on the approach used to overcome overfitting, regularization techniques can be classified into three categories.

The key difference between these two is the penalty term.

Ridge regression adds the squared magnitude of the coefficients as a penalty term to the loss function. People are often confused about which regularization approach to choose to avoid overfitting when training a machine learning model. Regularization is one of the most basic and important concepts in machine learning.
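To make the two penalty terms concrete, here is a minimal sketch in plain Python. The function names, the tiny dataset, and the value of `lam` are all assumptions chosen for illustration; this is not a production implementation of Ridge or Lasso.

```python
def l1_penalty(coefs, lam):
    """Lasso-style penalty: lam times the sum of absolute coefficient values."""
    return lam * sum(abs(c) for c in coefs)


def l2_penalty(coefs, lam):
    """Ridge-style penalty: lam times the sum of squared coefficient values."""
    return lam * sum(c * c for c in coefs)


def squared_error(X, y, coefs):
    """Sum of squared residuals of a linear model without an intercept."""
    total = 0.0
    for row, target in zip(X, y):
        prediction = sum(x * c for x, c in zip(row, coefs))
        total += (target - prediction) ** 2
    return total


def penalized_loss(X, y, coefs, lam, penalty):
    """Data-fit term plus the chosen penalty term."""
    return squared_error(X, y, coefs) + penalty(coefs, lam)


# Made-up data: these coefficients fit it exactly, so only the penalty remains.
X = [[1.0, 2.0], [2.0, 1.0], [3.0, 3.0]]
y = [5.0, 4.0, 9.0]
coefs = [1.0, 2.0]

ridge_loss = penalized_loss(X, y, coefs, lam=0.5, penalty=l2_penalty)
lasso_loss = penalized_loss(X, y, coefs, lam=0.5, penalty=l1_penalty)
```

Because the residuals are zero in this toy case, the two losses differ only through the penalty: the L2 term grows with the square of each coefficient, while the L1 term grows with its absolute value.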

In the case of neural networks, the complexity can be varied by changing the network's weights, either their number or their values.
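The two ways of varying a neural network's complexity, changing the number of weights or constraining their values, can be sketched as follows. The helper names, layer sizes, and penalty strength are hypothetical and chosen only for illustration.

```python
def count_weights(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the units per layer, e.g. [inputs, hidden, outputs].
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus bias vector
    return total


def l2_weight_penalty(weights, lam):
    """Penalty added to the loss to keep weight values small."""
    return lam * sum(w * w for w in weights)


# Way 1: change the structure (number of weights).
small_net = count_weights([10, 4, 1])
large_net = count_weights([10, 64, 1])

# Way 2: keep the structure, but penalize large weight values.
penalty = l2_weight_penalty([0.5, -1.0, 2.0], lam=0.01)
```

Shrinking the hidden layer reduces how many parameters the network can fit; the weight penalty leaves the count unchanged but discourages large values.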

Among the many regularization techniques, such as L2 and L1 regularization, dropout, data augmentation, and early stopping, we will focus here on the intuitive differences between L1 and L2. Regularization is one of the most important concepts in machine learning: it is a form of regression that constrains or shrinks the coefficient estimates towards zero.
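One intuitive difference between L1 and L2 shows up in the simplest possible case: a single coefficient with a squared-error fit term. The sketch below assumes that simplified one-dimensional setting; the function names and the sample values are illustrative only.

```python
def ridge_shrink(w, lam):
    """Minimizer over v of 0.5*(v - w)**2 + 0.5*lam*v**2.

    L2 shrinks every coefficient by the same proportion.
    """
    return w / (1.0 + lam)


def lasso_shrink(w, lam):
    """Minimizer over v of 0.5*(v - w)**2 + lam*abs(v) (soft-thresholding).

    L1 subtracts a fixed amount and sets small coefficients exactly to zero.
    """
    magnitude = max(abs(w) - lam, 0.0)
    return magnitude if w >= 0 else -magnitude


unpenalized = [3.0, -0.4, 0.2, -2.0]  # made-up coefficient estimates
lam = 0.5

ridge = [ridge_shrink(w, lam) for w in unpenalized]
lasso = [lasso_shrink(w, lam) for w in unpenalized]
```

In this setting the L2 results are all smaller but none is zero, while L1 zeroes out the two coefficients whose magnitude is below `lam`. This is why Lasso is often described as performing feature selection.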

In this way, regularization limits a model's capacity to learn from noise.

Change network complexity by changing the network parameters (the values of the weights). Each regularization method is rated strong, medium, or weak based on how effectively it addresses overfitting.

Regularization is a form of constrained regression that works by shrinking the coefficient estimates towards zero. This technique helps prevent the model from overfitting by adding extra information to it.

Let's look at this linear regression equation. Change network complexity by changing the network structure (the number of weights).
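A standard way to write a linear regression model and its Ridge and Lasso losses is given below. The notation ($\beta_j$ for coefficients, $\lambda$ for the penalty strength, $p$ features, $n$ samples) is assumed here for illustration rather than taken from the text above.

$$
\hat{y}_i = \beta_0 + \sum_{j=1}^{p} \beta_j x_{ij}
$$

$$
L_{\text{ridge}} = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2
\qquad
L_{\text{lasso}} = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 + \lambda \sum_{j=1}^{p} \left| \beta_j \right|
$$

With $\lambda = 0$ both losses reduce to ordinary least squares; larger $\lambda$ pulls the coefficients more strongly towards zero.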

Machine Learning Mastery With Weka Pdf Machine Learning Statistical Classification

What Is Regularization In Machine Learning

Hyperparameters Introduction Search Youtube

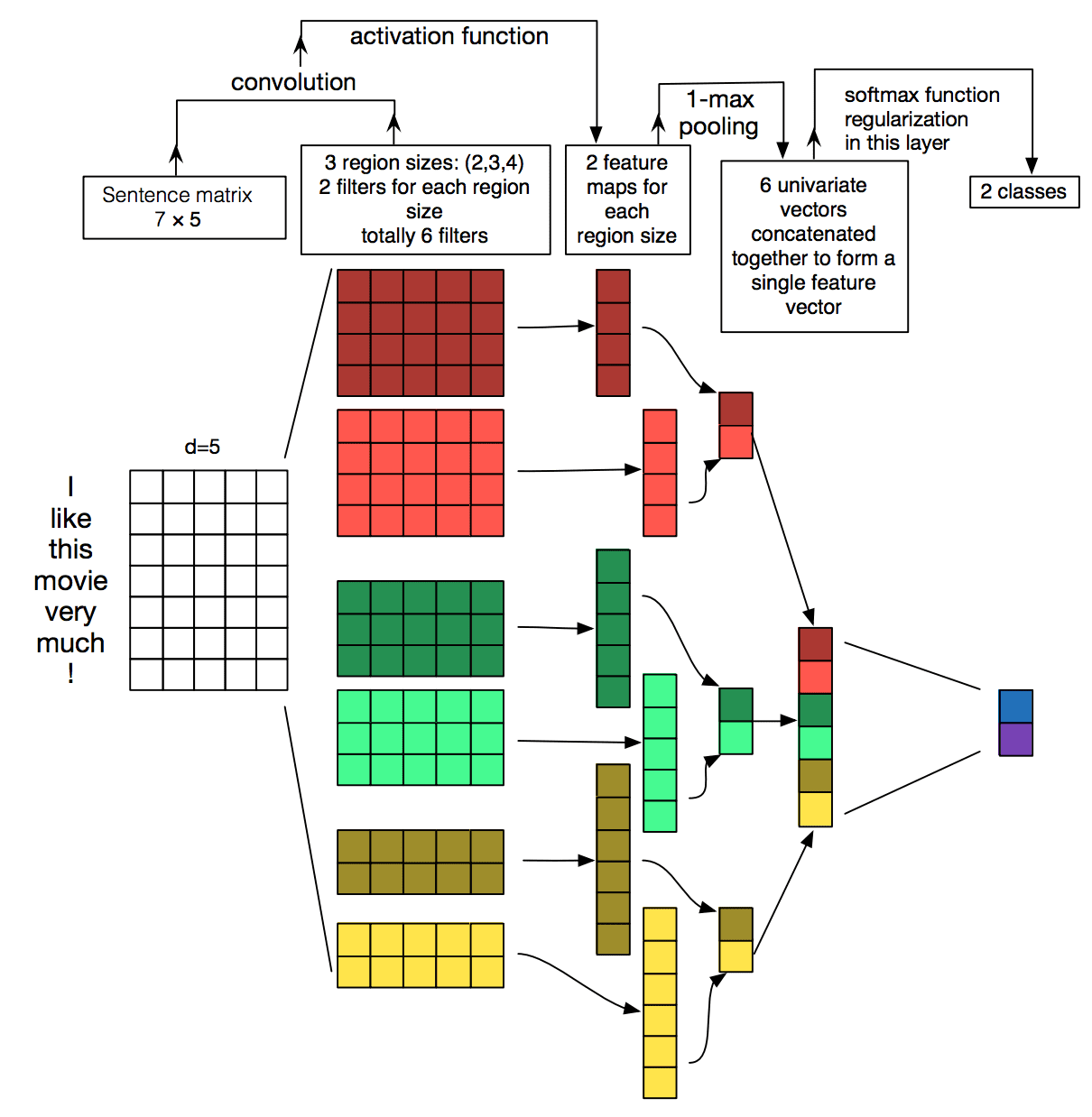

Best Practices For Text Classification With Deep Learning

Linear Regression For Machine Learning

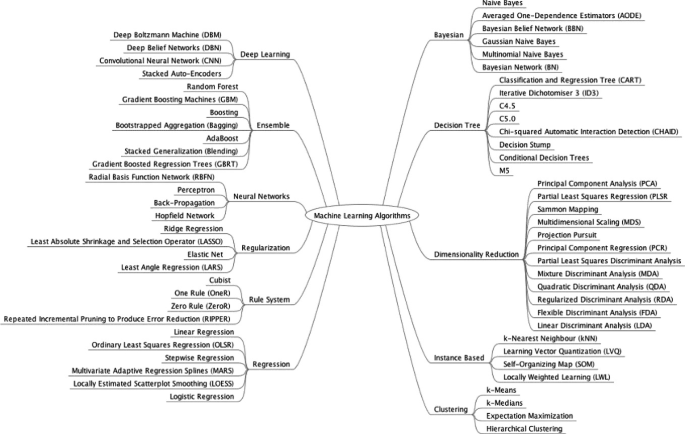

A Tour Of Machine Learning Algorithms

Start Here With Machine Learning

Become Awesome In Data February 2017

Regularization In Machine Learning Simplilearn

Regularization In Machine Learning And Deep Learning By Amod Kolwalkar Analytics Vidhya Medium

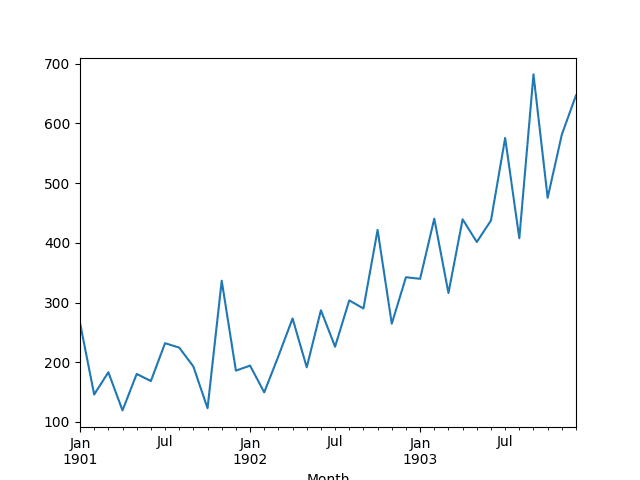

Weight Regularization With Lstm Networks For Time Series Forecasting

Overview Of The Artificial Intelligence Methods And Analysis Of Their Application Potential Springerlink

Machine Learning Mastery Workshop Enthought Inc

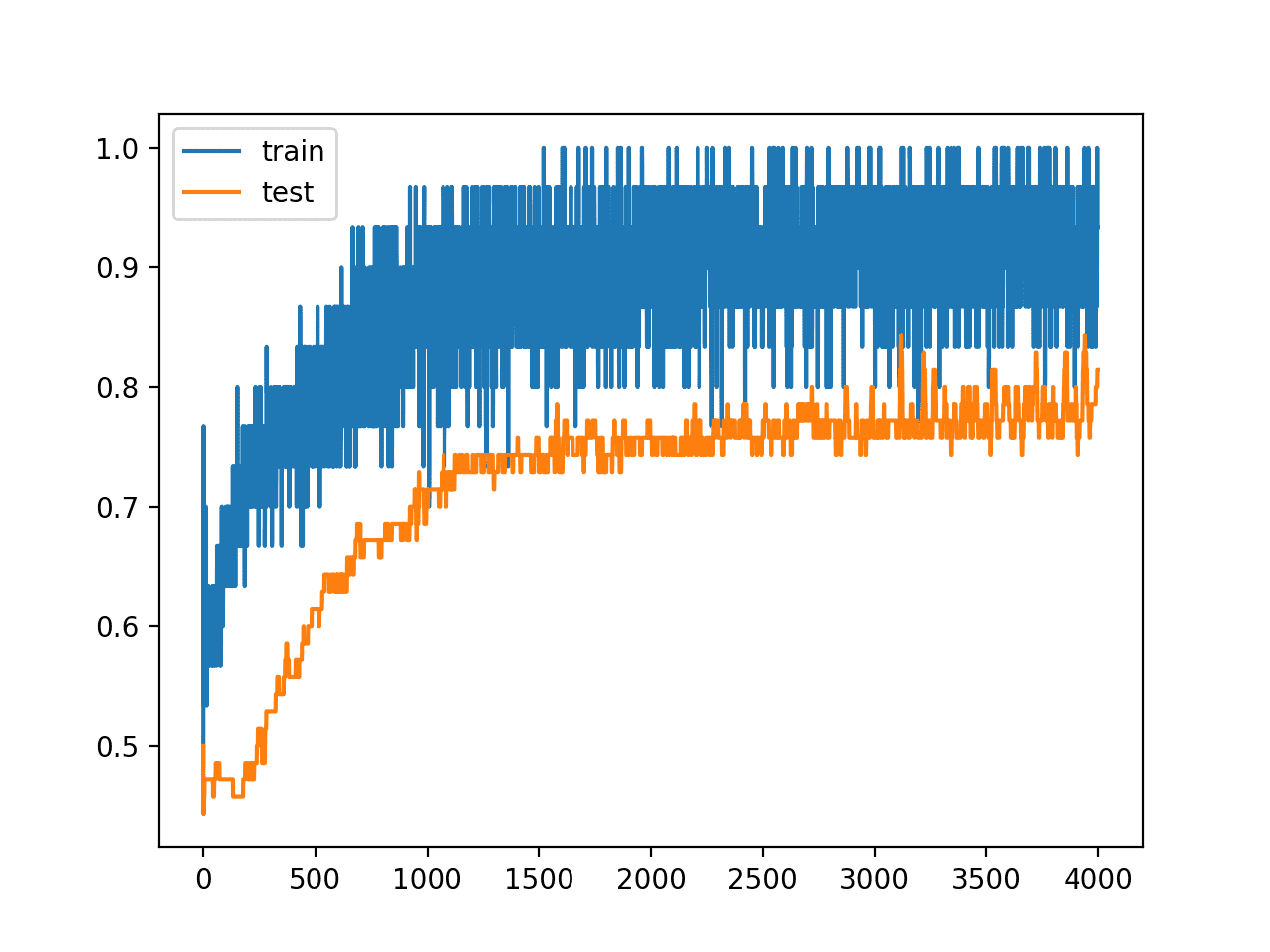

Day 3 Overfitting Regularization Dropout Pretrained Models Word Embedding Deep Learning With R

Comprehensive Guide On Feature Selection Kaggle

A Gentle Introduction To Dropout For Regularizing Deep Neural Networks
