Regularization in Machine Learning: Meaning

Regularization has arguably been one of the most important collections of techniques fueling the recent machine learning boom. Yet the concept is much older than deep learning and is an integral part of classical statistics.

What Is Regularization in Machine Learning

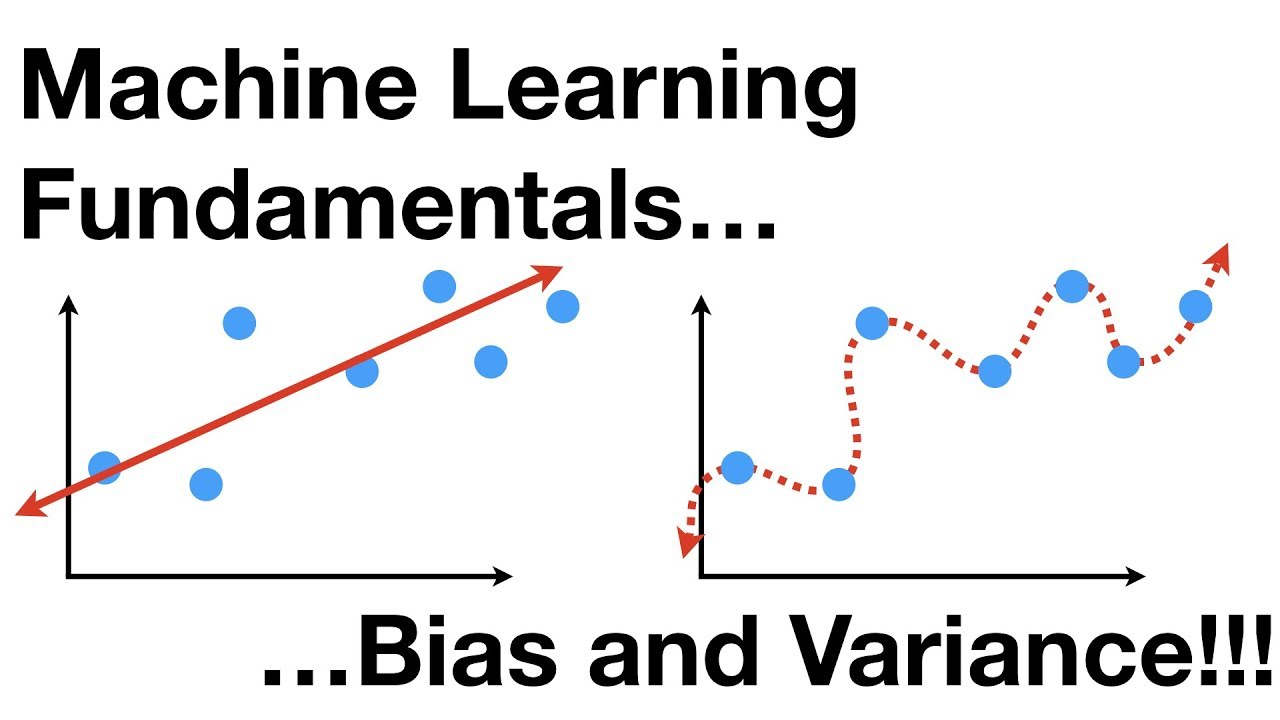

Regularization aims to reduce model variance without a substantial increase in bias.

Regularization is not a complicated technique, and it simplifies the model being learned. It is one of the techniques used to control overfitting in highly flexible models. The cheat sheet below summarizes different regularization methods.

Regularization is used to solve an ill-posed problem (a problem without a unique and stable solution) and to prevent model overfitting. In mathematics, statistics, finance, computer science, and particularly in machine learning and inverse problems, regularization is a process that changes the resulting answer to be simpler.
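To make "ill-posed" concrete, here is a minimal sketch in plain Python (all function names are hypothetical). With two identical feature columns, least squares has no unique solution because the matrix XᵀX is singular. Adding a ridge-style term lam·I, one common form of regularization chosen here as an assumption, makes the system solvable and selects a single answer.

```python
def solve_2x2(a, b, c, d, e, f):
    """Solve [[a, b], [c, d]] @ [x, y] = [e, f] by Cramer's rule.

    Returns None when the matrix is singular (no unique solution).
    """
    det = a * d - b * c
    if abs(det) < 1e-12:
        return None
    return ((e * d - b * f) / det, (a * f - e * c) / det)


def ridge_normal_equations(X, y, lam):
    """Build the entries of X^T X + lam*I and X^T y for a two-feature design matrix X."""
    s11 = sum(r[0] * r[0] for r in X) + lam
    s12 = sum(r[0] * r[1] for r in X)
    s22 = sum(r[1] * r[1] for r in X) + lam
    t1 = sum(r[0] * t for r, t in zip(X, y))
    t2 = sum(r[1] * t for r, t in zip(X, y))
    return s11, s12, s12, s22, t1, t2


# Two identical feature columns: infinitely many weight pairs fit equally well.
X = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y = [2.0, 4.0, 6.0]

print(solve_2x2(*ridge_normal_equations(X, y, lam=0.0)))  # None: ill-posed
print(solve_2x2(*ridge_normal_equations(X, y, lam=0.1)))  # unique, symmetric weights
```

Without the penalty any pair with w1 + w2 = 2 fits perfectly; with it, the solver returns one pair that splits the weight evenly between the duplicated features.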

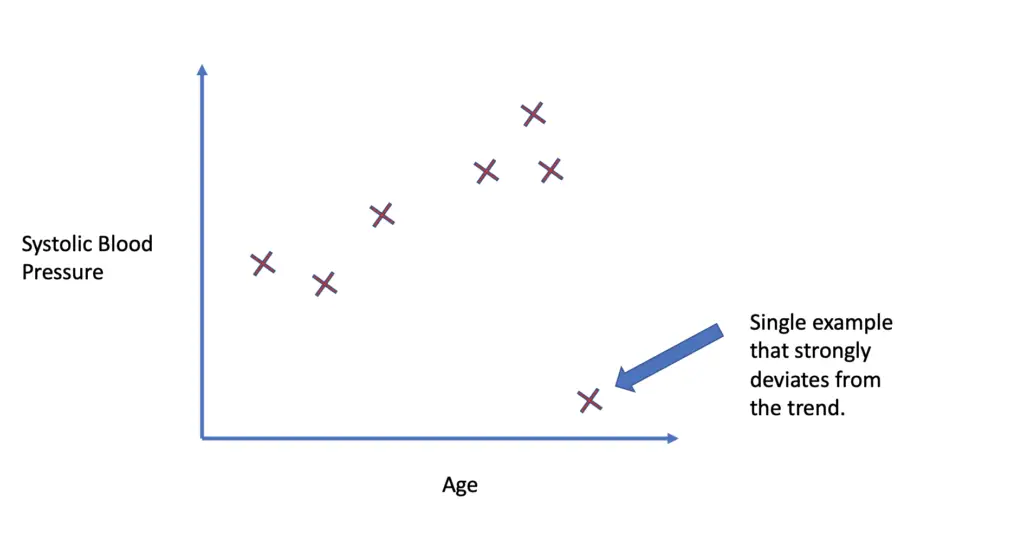

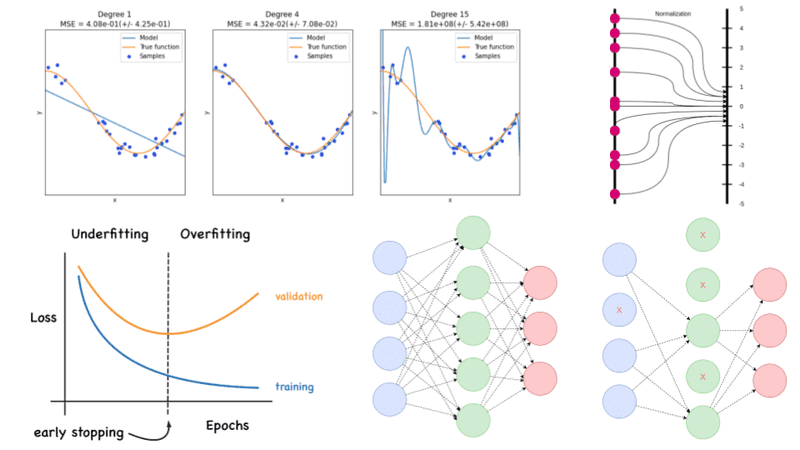

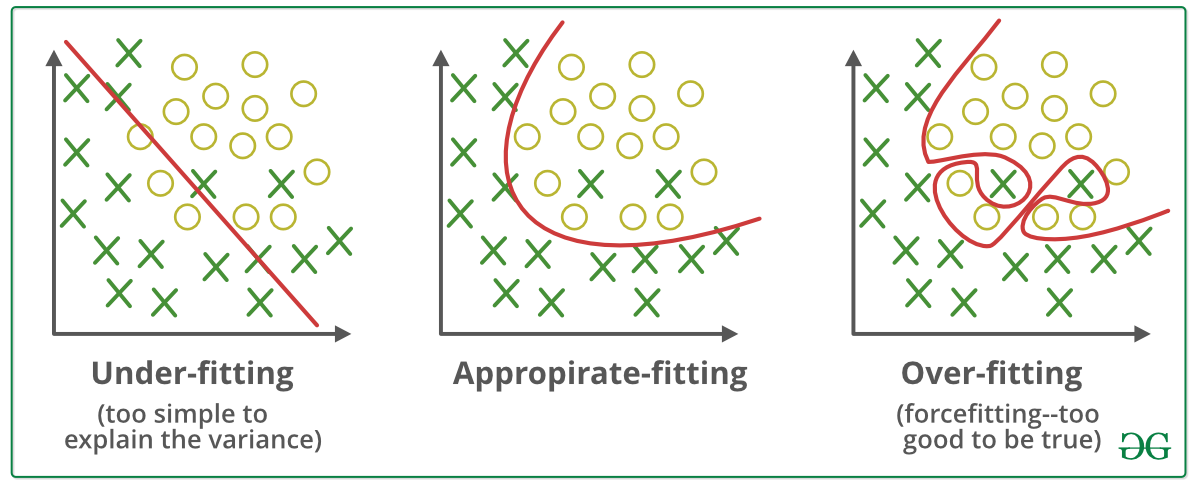

Overfitting in an existing model can be reduced by adding a penalty term to the cost function that penalizes complex curves more heavily. Regularization can be implemented in multiple ways: by modifying the loss function, the sampling method, or the training approach itself. Poor performance can occur due to either overfitting or underfitting the data.
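A minimal sketch of such a penalized cost, assuming a linear model, mean squared error as the data-fit term, and a squared-L2 penalty weighted by a hypothetical parameter `lam` (other losses and penalties are equally possible):

```python
def predict(w, b, x):
    """Linear model: dot product of weights and features plus a bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def mse(w, b, X, y):
    """Mean squared error of the linear model on the data (X, y)."""
    return sum((predict(w, b, x) - t) ** 2 for x, t in zip(X, y)) / len(y)


def penalized_cost(w, b, X, y, lam):
    """Data-fit term plus lam times the squared L2 norm of the weights.

    The bias b is left unpenalized, a common (but not universal) convention.
    """
    return mse(w, b, X, y) + lam * sum(wi ** 2 for wi in w)


X = [[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]]
y = [5.0, 2.0, 5.0]
w, b = [1.0, 2.0], 0.0
print(mse(w, b, X, y))                      # 0.0: these weights fit the points exactly
print(penalized_cost(w, b, X, y, lam=0.1))  # 0.5: only the penalty remains
```

The penalty grows with the size of the weights, so among models that fit the data about equally well, the one with smaller weights (a smoother curve) receives the lower cost.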

There are different ways to go about it; one is to define a loss function and then iteratively minimize it. There are mainly two types of regularization: L1 (lasso) and L2 (ridge).
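The two types usually meant are L1 (lasso) and L2 (ridge) regularization. The sketch below, with hypothetical helper names, computes each penalty and applies one proximal step for each, the update that proximal-gradient optimizers use to handle a penalty term. It illustrates the practical difference: L1 can set weights exactly to zero, while L2 only scales them down.

```python
def l1_penalty(w):
    """L1 (lasso) penalty: sum of absolute values of the weights."""
    return sum(abs(wi) for wi in w)


def l2_penalty(w):
    """L2 (ridge) penalty: sum of squared weights."""
    return sum(wi * wi for wi in w)


def l1_prox(w, lam):
    """Proximal step for lam * L1 penalty (soft-thresholding).

    Weights with |wi| <= lam become exactly zero; the rest move toward zero by lam.
    """
    out = []
    for wi in w:
        if wi > lam:
            out.append(wi - lam)
        elif wi < -lam:
            out.append(wi + lam)
        else:
            out.append(0.0)
    return out


def l2_prox(w, lam):
    """Proximal step for (lam / 2) * L2 penalty: uniform rescaling by 1 / (1 + lam)."""
    return [wi / (1.0 + lam) for wi in w]


w = [3.0, -0.5, 0.25]
print(l1_penalty(w), l2_penalty(w))  # 3.75 9.3125
print(l1_prox(w, lam=1.0))           # [2.0, 0.0, 0.0]
print(l2_prox(w, lam=1.0))           # [1.5, -0.25, 0.125]
```

With `lam=1.0`, soft-thresholding turns the two small weights into exact zeros, while the L2 step halves every weight and keeps all of them nonzero.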

Overfitting is a phenomenon where the model accounts for every point in the training dataset, noise included. Regularization is essential in machine and deep learning. The regularization penalty controls model complexity: larger penalties yield simpler models.
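For a single slope with no intercept, the effect of the penalty size can be worked out in closed form: minimizing sum((y - w*x)^2) + lam * w^2 gives w = sum(x*y) / (sum(x*x) + lam). The sketch below (toy data and names are made up for illustration) evaluates this for several values of `lam`.

```python
def ridge_slope(xs, ys, lam):
    """Slope w minimizing sum((y - w*x)^2) + lam * w^2 (line through the origin).

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data lying roughly on y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in [0.0, 1.0, 10.0, 100.0]:
    # Larger lam -> smaller slope -> flatter, simpler model.
    print(lam, round(ridge_slope(xs, ys, lam), 4))
```

Because `lam` only appears in the denominator, every increase in the penalty strictly reduces the fitted slope for this data.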

Regularization helps us train a model that is not tied too closely to the peculiarities of the training data. In machine learning, regularized problems impose an additional penalty on the cost function.

How well a model fits the training data, whether it underfits, overfits, or strikes a balance, largely determines how well it performs on unseen data. Of course, fancy definitions and complicated terminology are of little worth to a complete beginner.

Regularization methods add extra constraints to do two things: make an ill-posed problem solvable and prevent overfitting. The major concern while training a neural network, or any machine learning model, is to avoid overfitting. Still, it is often not entirely clear what we mean by the term regularization, and there exist several competing definitions.

Regularization is one of the most important concepts of machine learning. It discourages learning a more complex or flexible model so as to avoid the risk of overfitting. In regression, for example, it shrinks the coefficient estimates towards zero.
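In practice this shrinkage is often applied inside gradient descent, where an L2 penalty adds 2·lam·w to the gradient and so pulls every coefficient toward zero at each step (often called weight decay). A minimal sketch, assuming a linear model without intercept and a hand-picked learning rate and step count (all hypothetical choices):

```python
def fit_ridge_gd(X, y, lam, lr=0.01, steps=5000):
    """Fit weights w (no intercept) by gradient descent on
    (1/n) * sum((x.w - y)^2) + lam * sum(w^2).

    The penalty contributes 2*lam*w to the gradient, so every step also
    pulls each coefficient toward zero ("weight decay").
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        residuals = [sum(wj * xj for wj, xj in zip(w, x)) - t for x, t in zip(X, y)]
        grad = [
            2.0 / n * sum(r * x[j] for r, x in zip(residuals, X)) + 2.0 * lam * w[j]
            for j in range(d)
        ]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


# Toy data that the weights [3, -1] fit exactly.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
y = [3.0, -1.0, 2.0, 5.0]

for lam in [0.0, 1.0, 10.0]:
    w = fit_ridge_gd(X, y, lam)
    print(lam, [round(wj, 3) for wj in w])
```

On this toy data, `lam=0.0` recovers the exact fit w = [3, -1]; as `lam` grows, the overall size (L2 norm) of the coefficient vector shrinks, although an individual coefficient can change sign along the way.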

Setting up a machine learning model is not just about feeding it data. When you train a model, for example an artificial neural network, you will encounter numerous problems. Sometimes the model performs well on the training data but poorly on the test data.

Regularization is used in machine learning as a remedy for overfitting: it reduces the variance of the model under consideration. Overfitting means the model is not able to predict the output correctly when it deals with unseen data.

The concept of regularization is widely used even outside the machine learning domain. Regularization techniques improve performance by preventing the designed model from overfitting. In general, regularization involves augmenting the input information to encourage generalization.
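One simple way to augment the input information is noise injection: train on extra copies of each example whose inputs are slightly perturbed. A sketch under that assumption (Gaussian noise, labels unchanged; the function name and its parameters are hypothetical):

```python
import random


def augment_with_noise(X, y, copies, sigma, seed=0):
    """Return the original data plus `copies` jittered copies of every input.

    Each copy adds independent Gaussian noise (std = sigma) to the inputs and
    keeps the original label, so the model sees slightly perturbed versions
    of each training example.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    X_aug, y_aug = [list(x) for x in X], list(y)
    for _ in range(copies):
        for x, t in zip(X, y):
            X_aug.append([xi + rng.gauss(0.0, sigma) for xi in x])
            y_aug.append(t)
    return X_aug, y_aug


X = [[1.0, 2.0], [3.0, 4.0]]
y = [0, 1]
X_aug, y_aug = augment_with_noise(X, y, copies=3, sigma=0.1)
print(len(X_aug), len(y_aug))  # 8 8
```

The noise scale `sigma` plays a role similar to a penalty weight: too small and it has little effect, too large and it blurs the signal the model should learn.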

This technique prevents the model from overfitting by adding extra information to it. It is often used to obtain results for ill-posed problems or to prevent overfitting.

In addition, there are cases where regularization is used to reduce the complexity of the model without decreasing its performance.

While regularization is used with many different machine learning algorithms, including deep neural networks, this article uses linear regression to illustrate it.

Designing a simpler, smaller model while maintaining the same performance is often important. Overfitting is a phenomenon that occurs when a model learns the detail and noise in the training data to the extent that it negatively impacts the model's performance on new data.
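The effect can be reproduced with a toy experiment (all data and helper names are made up for illustration). A polynomial through five noisy training points accounts for every training point exactly, while a straight line does not; on new points from the same underlying line, the roles reverse.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate, at x, the unique polynomial of degree <= len(xs) - 1
    that passes through every training point (xs[i], ys[i])."""
    total = 0.0
    for k, (xk, yk) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != k:
                basis *= (x - xj) / (xk - xj)
        total += yk * basis
    return total


def fit_line(xs, ys):
    """Ordinary least-squares line; returns (slope, intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def mse(pred, ys):
    """Mean squared error between predictions and targets."""
    return sum((p - y) ** 2 for p, y in zip(pred, ys)) / len(ys)


# Training data: the line y = 2x + 1 plus a fixed, hand-picked "noise" term.
x_train = [0.0, 1.0, 2.0, 3.0, 4.0]
noise = [0.3, -0.4, 0.5, -0.3, 0.2]
y_train = [2 * x + 1 + e for x, e in zip(x_train, noise)]

# Unseen data: noise-free points from the same underlying line.
x_test = [0.5, 1.5, 2.5, 3.5]
y_test = [2 * x + 1 for x in x_test]

slope, intercept = fit_line(x_train, y_train)
line_train = mse([slope * x + intercept for x in x_train], y_train)
line_test = mse([slope * x + intercept for x in x_test], y_test)
poly_train = mse([lagrange_predict(x_train, y_train, x) for x in x_train], y_train)
poly_test = mse([lagrange_predict(x_train, y_train, x) for x in x_test], y_test)

print(f"line:       train {line_train:.4f}  test {line_test:.4f}")
print(f"polynomial: train {poly_train:.4f}  test {poly_test:.4f}")
```

The polynomial's training error is zero, but its test error is far larger than the line's: it has memorized the noise, which is overfitting in miniature.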
